How Gemini’s Multimodal AI Could Empower Smart Glasses

- May Mei

- May 16, 2025

Background

This article is part of our ongoing internal research exploring how existing multimodal AI solutions can enhance the functionality and real-world performance of smart glasses.

After reviewing several domestic AI platforms, I’ve now shifted my focus to international solutions — starting with Google’s Gemini.

After hands-on testing in Google AI Studio and a review of Google's official materials, I've compiled the following insights on how Gemini's multimodal and on-device capabilities could empower AI-powered eyewear.

1. Multimodality: The Cornerstone of Intelligent Interaction

Gemini AI's strength lies in its ability to process and integrate multiple types of data:

Vision: Using the cameras on smart glasses, Gemini can interpret visual information, such as identifying objects, reading text, and detecting faces.

Audio: Through built-in microphones and speakers, it can understand spoken language and provide auditory feedback, enabling natural voice interactions.

Text: Gemini can read and comprehend text in the environment, such as signs or menus, and present relevant information to the user.

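Because Gemini is natively multimodal, it can reason over these inputs together (for example, answering a spoken question about the object currently in view) instead of handling each stream in isolation. The result is a more cohesive, context-aware user experience.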

2. Advanced Reasoning and Contextual Understanding

Beyond simple recognition, Gemini can reason about context. By combining what the user sees, hears, and says, it can infer intent and offer relevant assistance. This enables richer interactions, such as proactive suggestions and personalized responses, that make smart glasses more useful in daily life.

3. On-Device Capabilities with Gemini Nano

For smart glasses to be truly effective, they cannot rely solely on a cloud connection. Gemini Nano, the smallest model in the Gemini family, runs on-device, which reduces round-trip latency and keeps data local for better privacy. In principle, this allows features like real-time translation, fast object recognition, and context-aware prompts to run directly on the device for a seamless user experience.
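The paragraph above implies a hybrid design: handle what you can locally and fall back to the cloud only when it is needed and appropriate. The sketch below shows one possible routing policy under stated assumptions. It is not part of Gemini Nano or Android AICore; the task names, the `Request` type, and the `choose_backend` function are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass

# Hypothetical task identifiers assumed to be small enough for an on-device model.
# These names are illustrative only; they are not part of any Gemini or Android API.
ON_DEVICE_TASKS = {"translate_text", "label_object", "contextual_prompt"}


@dataclass
class Request:
    """A hypothetical request coming from the glasses."""
    task: str
    contains_private_data: bool = False


def choose_backend(req: Request, online: bool) -> str:
    """Pick where to run a request under a simple latency- and privacy-first policy.

    Returns "on_device", "cloud", or "deferred" (no suitable backend right now).
    """
    if req.task in ON_DEVICE_TASKS:
        # Low latency, works offline, and the data never leaves the device.
        return "on_device"
    if online and not req.contains_private_data:
        # Heavier work (e.g. long summaries) goes to a larger cloud model.
        return "cloud"
    # Offline, or the request is sensitive and the local model cannot handle it.
    return "deferred"


if __name__ == "__main__":
    print(choose_backend(Request("translate_text"), online=False))
    print(choose_backend(Request("summarize_meeting"), online=True))
    print(choose_backend(Request("summarize_meeting", contains_private_data=True), online=True))
```

Checking the privacy flag before the cloud fallback means sensitive requests are deferred rather than silently sent off-device. A real product would tune both the task list and the policy to the hardware and models it actually targets.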

4. Generative Capabilities for Dynamic Interaction

Gemini's generative capabilities let smart glasses not only process information but also produce it: summarizing conversations, writing text based on what the camera sees, and offering creative suggestions. This turns smart glasses into proactive assistants.

Potential Applications of Smart Glasses with Gemini AI

Imagine smart glasses that can:

Real-World Search & Information Overlay:

Identify a plant and provide care instructions.

Offer historical context when viewing a landmark.

Translate foreign menus and suggest dietary options.

Real-Time Assistance & Productivity:

Guide users through assembling furniture with visual cues.

Set contextual reminders based on location.

Transcribe meetings, identify speakers, and summarize key points.

Enhanced Communication:

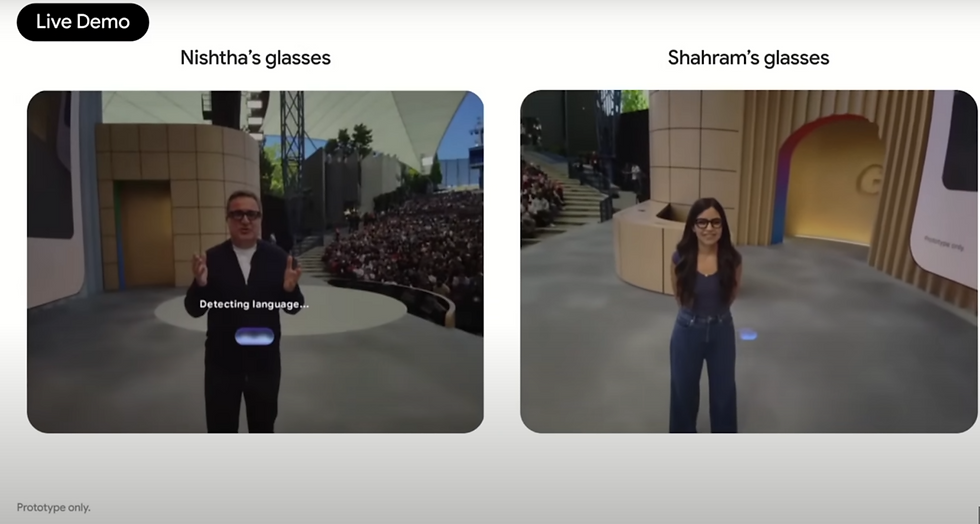

Provide real-time translation during conversations.

Offer social cues and reminders about previous interactions.

Accessibility:

Assist visually impaired users by describing surroundings and reading signs.

Provide real-time captioning for the hearing impaired.

Learning & Exploration:

Identify constellations in the night sky.

Deliver information about museum exhibits upon viewing.

The Road Ahead

Integrating Gemini's multimodal AI into smart glasses could be a significant step forward for wearable technology. As devices get better at understanding and interacting with the world around them, we move closer to realizing the full potential of augmented reality. Fully autonomous smart glasses are still on the horizon, but the foundational technologies are maturing rapidly, pointing to a future where our smart eyewear doesn't just see the world: it understands it.

🔍 Additional Notes

Gemini Nano Access via Android AICore:

Google is making Gemini Nano available to Android developers through Android AICore, a system service introduced in Android 14 that provides access to on-device foundation models, including Gemini Nano. Availability is currently limited to supported devices.

Developer Readiness and Future Potential:

Hardware limitations remain a challenge for smart glasses. If those constraints were resolved, however, developers working with Gemini today could in principle build versions of most of the AI-powered features described in this article.

📝 Author’s Note

This article is based on hands-on exploration in Google AI Studio and on publicly available product demonstrations. The applications discussed are grounded in the current capabilities offered by Gemini Nano, Android AICore, and the broader Gemini AI ecosystem.
